EU AI Act: what does it mean for your business?

By Guillaume Baudour, Consultant, Publyon

The EU Artificial Intelligence Act (EU AI Act), designed to regulate and govern evolving AI technologies, will play a crucial role in addressing the opportunities and risks of AI within the EU and beyond.

In just a few years, AI-powered technologies such as ChatGPT, Bard, Siri, and Alexa have made artificial intelligence (AI) deeply ingrained in our society. This rapidly evolving technology brings significant opportunities across various sectors, including healthcare, education, business, industry, and entertainment.

However, concerns are growing about the potential risks that unregulated AI poses to fundamental rights and freedoms. These concerns have become especially pertinent in light of the upcoming elections in prominent Member States and for the European Parliament.

Table of contents

1. What is the EU AI Act

2. AI on the global scene

3. The EU AI Act and the EU GDPR

4. The objectives of the EU AI Act

5. The classification of the AI systems

6. The latest updates on the EU AI Act

7. Implications for your business

What is the EU AI Act?

At the forefront of the digital frontier, the EU’s paramount concern is to ensure that AI systems within its borders are employed safely and responsibly, warding off potential harm across various domains. That is precisely why, in April 2021, the European Commission introduced a pioneering piece of legislation, the EU AI Act, marking the first-ever regulatory framework for AI in Europe.

This step is just one element of a broader AI package that also includes a Coordinated Plan on AI and a Communication on fostering a European approach to AI. The EU AI Act is the first attempt to create a legislative framework for AI aimed at addressing the risks and problems linked to this technology by, among other things:

- Enhancing governance and enforcement of existing laws on fundamental rights and safety requirements to ensure the ethical and responsible development, deployment, and use of AI.

- Introducing clear requirements and obligations for high-risk AI systems and for their users and providers.

- Facilitating a Single Market for AI to prevent market fragmentation and lessen administrative and financial burdens for businesses.

The end goal is to make the European Union a global, trustworthy environment in which AI can thrive.

AI on the global scene

This regulatory process on AI fits into broader global developments. On 9 October, officials of the G7 under the Hiroshima Artificial Intelligence Process drafted 11 principles on the use and creation of advanced AI. These principles aim to promote the safety and trustworthiness of AI, including generative AI (GAI) like ChatGPT. GAI refers to AI systems and models that can autonomously create novel content, such as text, images, videos, speech, music and data.

Moreover, the G7 officials aim to create an international voluntary Code of Conduct for organisations on the most advanced uses of AI. In the United States, there are ongoing disputes over intellectual property (copyright) rights and AI, which may influence AI legislative discussions in the EU. The United Nations, the United Kingdom, China and the other G20 countries are also actively engaged in shaping policies and agreements around AI.

The EU AI Act and the EU GDPR: what is the difference?

The upcoming EU AI Act is a separate piece of legislation from the EU General Data Protection Regulation (GDPR). The GDPR, which became effective on 25 May 2018, standardised data privacy rules across the EU, in particular regarding personal data.

In contrast, the EU AI Act establishes a new legislative framework specifically for AI technologies, focusing on their safety and ethical use. While the GDPR includes obligations for data controllers and processors, the EU AI Act is centred on providers and users of AI systems.

However, when AI systems process personal data, they must still adhere to the GDPR's principles. This means that businesses employing AI in the EU must comply with GDPR requirements when handling personal data.

Have we piqued your curiosity? Then keep reading: in this blog post, Publyon EU provides you with key insights on:

- The objectives of the legislation

- The relevant implications for businesses

- The latest updates in the EU legislative process

What are the objectives of the EU AI Act?

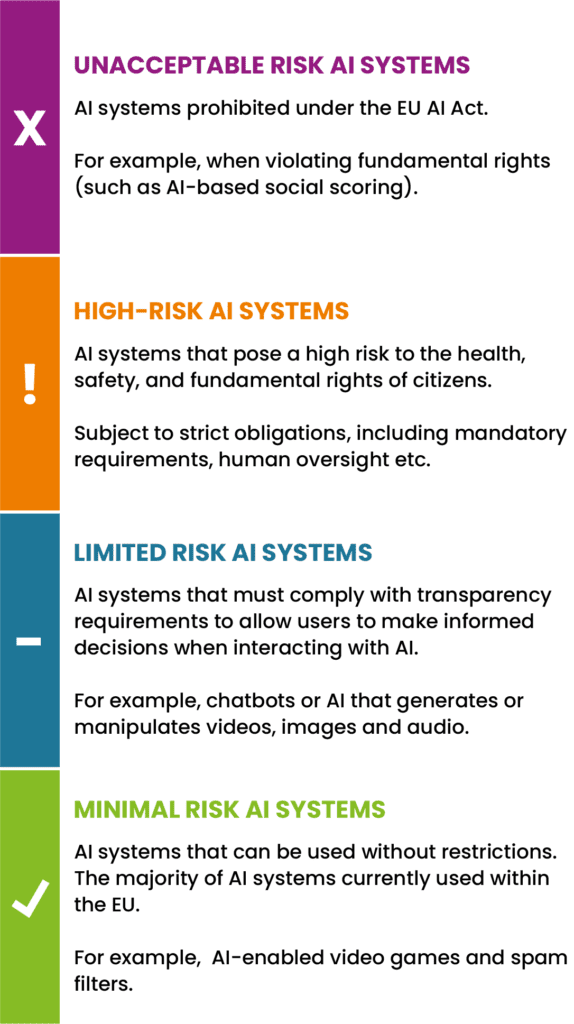

The EU AI Act aims to provide a technology-neutral definition of AI systems and establish harmonised horizontal regulation applicable to AI systems. This regulation is structured around a “risk-based” approach, classifying AI systems into four distinct categories. These categories are defined as “unacceptable risk”, “high-risk”, “limited risk” and “minimal risk”.

Each classified AI system is subject to specific requirements and obligations. A European Artificial Intelligence Board (AI Board) will also be created to enhance oversight at the Union level. The AI Board will consist of representatives from the European Commission and the Member States. Its main responsibilities will include ensuring the EU AI Act’s effective and harmonised implementation by supporting the collaboration of national supervisory authorities with the Commission.

In addition, the AI Board will provide guidance and expertise to the Commission and facilitate best practices among the Member States.

Risk categories: the classification of the AI systems according to the EU AI Act

Unacceptable risk AI systems

AI systems that are labelled as an unacceptable risk will be prohibited under the EU AI Act, for example because they violate fundamental rights. They include AI-based social scoring, ‘real-time’ remote biometric identification in public spaces for law enforcement (with certain exemptions), and systems that deploy subliminal techniques to distort people’s behaviour in ways that cause harm. These rules are designed to address practices like the social credit system used in certain parts of the world.

High-risk AI systems

High-risk AI systems are those that pose a high risk to the health, safety, and fundamental rights of citizens. Whether a system is high-risk depends not only on its function, but also on the specific purpose and modalities for which it is used. These systems will be subject to strict obligations, including mandatory requirements, human oversight, and conformity assessments. High-risk AI systems fall into two categories:

- those that fall under the EU’s product safety legislation (e.g. toys, cars and medical devices);

- those that are used in biometric identification and categorisation of people, critical infrastructure, educational training, safety components of products, employment, essential private and public services and benefits, law enforcement that interferes with citizens’ fundamental rights, migration management, and administration of justice and democratic processes.

Limited risk and minimal risk AI systems

Limited risk AI systems must comply with transparency requirements to allow users to make informed decisions when interacting with AI. They include chatbots, and AI that generates or manipulates videos, images, or audio. Generative AI, such as ChatGPT or Bard, will fall under limited-risk AI but might be subject to additional requirements. The proposal furthermore permits the unrestricted use of minimal-risk AI, which comprises most AI systems currently used within the EU. These systems encompass applications like AI-enabled video games and spam filters.
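For readers who maintain an inventory of AI systems, the four tiers described above can be sketched as a simple lookup. This is only an illustration: the names `RiskTier`, `OBLIGATIONS`, `EXAMPLE_SYSTEMS` and `obligations_for` are hypothetical, the obligations are paraphrased from this article rather than from the legal text, and real classification requires a legal assessment.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable risk"
    HIGH = "high-risk"
    LIMITED = "limited risk"
    MINIMAL = "minimal risk"


# Headline consequence per tier, paraphrased from the article (not legal text).
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: "prohibited",
    RiskTier.HIGH: "mandatory requirements, human oversight, conformity assessment",
    RiskTier.LIMITED: "transparency requirements",
    RiskTier.MINIMAL: "no restrictions",
}

# Hypothetical inventory using example systems mentioned in the article.
EXAMPLE_SYSTEMS = {
    "social scoring": RiskTier.UNACCEPTABLE,
    "AI in a medical device": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
    "spam filter": RiskTier.MINIMAL,
}


def obligations_for(system: str) -> str:
    """Return the tier and headline obligation for a known example system."""
    tier = EXAMPLE_SYSTEMS[system]
    return f"{system}: {tier.value} -> {OBLIGATIONS[tier]}"


for name in EXAMPLE_SYSTEMS:
    print(obligations_for(name))
```

A table like this is mainly useful as a starting point for an internal AI inventory; the tier assigned to each real system would still need to be checked against the final text of the Act.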

The latest updates on the EU AI Act

Council of the EU

The Council adopted its position on the EU AI Act in December 2022.

The Council of the EU:

- Narrowed down the definition of AI systems to “systems developed through machine learning approaches and logic- and knowledge-based approaches”;

- Extended the prohibition on using AI for social scoring to private actors;

- Imposed requirements on general-purpose AI systems;

- Simplified the compliance framework;

- Strengthened the role of the European AI Board.

European Parliament

On 14 June 2023, the Parliament adopted its position on the EU AI Act. Most notably, the Members of the European Parliament extended the list of prohibited AI systems and amended the definition of AI systems to align with that of the OECD.

Prohibited AI systems

The European Parliament’s position:

- Fully bans the use of biometric identification systems in the EU, both real-time and ex-post. The only exception is ex-post use in cases of severe crime, subject to prior judicial authorisation;

- Includes biometric categorisation systems using sensitive characteristics in the prohibited AI system classification;

- Also includes in this category predictive policing systems, emotion recognition systems, and AI systems using indiscriminate scraping of biometric data from CCTV footage to create facial recognition databases.

High-risk AI systems

The European Parliament’s position:

- Adds the requirement that systems must pose a significant risk of harm to citizens’ health, safety, fundamental rights, or the environment to be considered high-risk;

- Includes systems used to influence voters and the outcomes of elections on the list of high-risk AI systems.

Additionally, the European Parliament wants to facilitate innovation and support SMEs, which might struggle with the barrage of new rules. Therefore, the Parliament:

- Added exceptions for research activities and components of AI that are provided under open-source licences, and promoted the use of regulatory sandboxes;

- Wants to set up an AI complaints system for citizens and establish an AI Office for the oversight and enforcement of foundation models and, more broadly, general-purpose AI (GPAI);

- Strengthened national authorities’ competencies.

Lastly, the Parliament also introduced the concept of foundation models in the text and set a layered regulation on transparency requirements, safeguards, and copyright issues.

Foundation models are large machine-learning models that are pre-trained on large data sets to learn language patterns, facts and reasoning abilities, and that generate output in response to specific prompts. GPAI refers to AI systems which can be adapted to a wide range of applications. GPAI, GAI and foundation models are often used interchangeably, as the terms overlap and the terminology is still evolving.

Three-way negotiations

With both the Council and the Parliament having adopted their positions, the interinstitutional negotiations (trilogues) have now started. The first trilogue of 14 June 2023 was mainly symbolic, while those that followed proved more substantive.

In the trilogue that followed on 18 July, the EU institutions discussed innovation, sandboxes, real-world testing and fundamental rights impact assessments, and began work on the classification of high-risk AI systems. However, this was just the tip of the iceberg.

Later trilogues on 2-3 October and 24 October mostly centred on clarifying the requirements and obligations for providers of high-risk AI. A new ‘filter approach’ was introduced for the classification of high-risk AI. It would allow AI developers to avoid high-risk classification when their systems perform “purely accessory” tasks, and would let them decide for themselves whether their AI systems qualify. These tasks include:

- A narrow procedural task;

- Merely confirming or improving an accessory factor of human assessment;

- Performing a preparatory task.

A criterion on decision-making patterns was also added to the filter approach: AI systems should not be intended to substitute for or influence a previous human evaluation without “proper human review”. However, AI systems that use profiling would always be considered high-risk.

Turning to regulatory sandboxes, these were approved, with the exception of real-world testing, which remains a point of contention. The EU institutions also made compromises on market surveillance and enforcement, penalties, and fines.
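The logic of the filter approach can be sketched as a short self-assessment check. This is one possible reading of the negotiating position described above, not the final legal test: the class `SystemProfile`, its field names and the function `is_high_risk` are all hypothetical, and treating the decision-making-pattern criterion as a fourth exemption condition is an assumption.

```python
from dataclasses import dataclass


@dataclass
class SystemProfile:
    """Hypothetical self-assessment for an AI system in a listed high-risk area."""
    narrow_procedural_task: bool = False
    confirms_or_improves_accessory_factor: bool = False
    preparatory_task: bool = False
    # Assumption: works on decision-making patterns without replacing or
    # influencing a previous human evaluation unless properly reviewed.
    pattern_check_with_human_review: bool = False
    uses_profiling: bool = False


def is_high_risk(profile: SystemProfile) -> bool:
    """Apply the filter: profiling overrides every exemption."""
    if profile.uses_profiling:
        return True
    exempt = any([
        profile.narrow_procedural_task,
        profile.confirms_or_improves_accessory_factor,
        profile.preparatory_task,
        profile.pattern_check_with_human_review,
    ])
    return not exempt


# A system doing only a preparatory task would escape classification...
print(is_high_risk(SystemProfile(preparatory_task=True)))
# ...unless it also uses profiling.
print(is_high_risk(SystemProfile(preparatory_task=True, uses_profiling=True)))
```

The ordering matters: checking profiling first reflects the statement that profiling systems would always be considered high-risk, whatever else they do.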

A tiered approach

Additionally, the institutions started shaping a “tiered approach” for GPAI, foundation models and “high impact foundation models”. Under this approach, regulation would tighten with each tier: the most powerful models, such as ChatGPT, have a higher potential for systemic risk and would therefore be subject to even stronger market obligations. An AI Office would provide centralised oversight. However, the Council and Parliament remained at odds regarding the prohibitions of AI systems and exemptions for law enforcement and national security.

Developments around the EU AI Act continue to evolve quickly. On 6 November, it became known that the European Parliament had circulated a compromise text scrapping the complete ban on real-time remote biometric identification (RBI) in exchange for concessions on other parts of the file, such as a longer list of banned AI applications.

The discussions regarding foundation models and GAI remain a politically charged topic, with concerns that a stalemate might be inescapable. Countries such as France, Italy and Germany are pushing back, fearing overregulation that could kill companies and competition.

What happens next?

The next trilogue is scheduled for 6 December. EU policy makers are intensifying efforts to conclude the EU AI Act by the end of this year, and the Spanish Presidency of the Council of the EU has underlined its ambition to finalise the file by the end of 2023. A few more technical meetings are planned to tackle the most complex elements.

After the agreement between the EU institutions, the legislation will be published and come into force about two years later, at the end of 2025 or early 2026. There are still several contentious areas with questions that are yet to be answered. These include: prohibited AI systems and their exemptions for law enforcement and national security, foundation models and GPAI, fundamental rights assessments, the AI Office, the definition of AI, real-world testing of AI systems and the definitive list of high-risk AI systems (Annex III).

The EU AI Act: what are the implications for your business?

The EU AI Act will apply to all the parties involved in the development, deployment, and use of AI. It is therefore applicable across various sectors and will also apply to those outside of the EU if the products are intended to be used in the EU.

Risks and challenges

Member States will enforce the regulation and ensure businesses are compliant. Moreover, penalties may be imposed for non-compliance with the AI regulation, up to 30 million euros or 6% of the total annual turnover of a company, whichever is higher. However, the regulation considers the interests of small-scale providers, start-ups, and small and medium-sized enterprises (SMEs) and their economic viability.
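To make the penalty ceiling concrete, the short sketch below computes the maximum fine for a given turnover. It assumes the cap is the higher of the two amounts, as in the Commission's 2021 proposal; the function name `max_penalty_eur` and the turnover figures are hypothetical, and the final text may set different thresholds.

```python
FIXED_CAP_EUR = 30_000_000  # fixed ceiling mentioned in the article
TURNOVER_PERCENT = 6        # percentage of total annual turnover


def max_penalty_eur(annual_turnover_eur: int) -> float:
    """Upper bound of the fine, assuming the higher of the two caps applies."""
    turnover_cap = TURNOVER_PERCENT * annual_turnover_eur / 100
    return max(FIXED_CAP_EUR, turnover_cap)


# Hypothetical companies: the fixed cap dominates below EUR 500 million turnover.
for turnover in (100_000_000, 500_000_000, 1_000_000_000):
    print(f"turnover EUR {turnover:,}: cap EUR {max_penalty_eur(turnover):,.0f}")
```

Under this assumption, the 6% rule only becomes the binding ceiling once annual turnover exceeds 500 million euros.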

Businesses or public administrations that develop or use high-risk AI systems would have to comply with specific requirements and obligations. The Commission's impact assessment indicates that compliance with the new rules would cost companies approximately €6,000 to €7,000 for an average high-risk AI system worth €170,000 by 2025. Moreover, additional costs may arise for human oversight and verification.
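As a rough back-of-the-envelope check, the compliance estimate above can be expressed as a share of the system's value. The variable names are illustrative, and the calculation only covers the figures quoted in this article, not the additional oversight and verification costs.

```python
SYSTEM_VALUE_EUR = 170_000   # average high-risk AI system, per the estimate
COST_LOW_EUR = 6_000         # lower bound of estimated compliance cost
COST_HIGH_EUR = 7_000        # upper bound of estimated compliance cost

low_share = COST_LOW_EUR / SYSTEM_VALUE_EUR
high_share = COST_HIGH_EUR / SYSTEM_VALUE_EUR

# Format both shares as percentages with one decimal place.
print(f"Compliance cost: {low_share:.1%} to {high_share:.1%} of system value")
```

In other words, the estimated compliance cost amounts to roughly 3.5% to 4.1% of the value of an average high-risk system.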

However, businesses that develop or use AI systems that are not high-risk would merely have minimal information obligations.

Opportunities

The EU AI Act will establish a framework that ensures trust in AI for customers and citizens. Additionally, it aims to promote investments and innovations in AI, for example, by providing regulatory sandboxes. These sandboxes establish a controlled environment to test innovative technologies for a limited time under oversight. Special measures are also in place to reduce regulatory burden and to support SMEs and start-ups.

What now?

Businesses are advised to consider whether they use AI systems or models and to assess the risks associated with them. They should also consider the different obligations and requirements set for AI systems of different risk levels, and consider drafting a Code of Conduct. Additionally, businesses could look at how to improve their AI proficiency by brushing up on AI-related knowledge and competences. Lastly, businesses should actively follow the developments around the EU AI Act and prepare accordingly.

Learn more about our EU AI-related services

Publyon offers tailor-made solutions to navigate the evolving policy environment at the EU level and anticipate the impact of the EU AI-related legislation on your organisation. Would you like to know more about how your organisation can make the most out of the AI legislation in the EU? Make sure you do not miss the latest developments by subscribing to our EU Digital Policy Updates. If you’re intrigued by the EU’s latest efforts to regulate Artificial Intelligence, you can contact our AI expert, Mr. Guillaume Baudour (g.baudour@publyon.com).